How To Avoid SSR Load Issues in Node.js

In my experience consulting over the past few years, one thing has become clear: everyone struggles with scaling their SSR apps, no matter the chosen framework. SSR is expensive: it runs a lot of code intended for the browser on the server, just to pre-generate markup. Lately, web frameworks have been making strides in this area, Solid.js being a great example of fast, performant SSR. Still, SSR can be expensive enough that if you can't make it fast enough, you might be better off resorting to client-side rendering.

> If your SSR takes longer than 200ms (I'm generous), it's better to render on the client.
>
> Matteo Collina (@matteocollina), May 9, 2022

And that's the main consideration you should have: if you can avoid SSR, avoid it.

There are two ways you can avoid SSR:

- Static Site Generation: just build and statically deploy your app. It can be an SPA or an MPA; it doesn't matter. Either way, whether the browser runs JavaScript or simply displays statically generated HTML, the server no longer renders anything at request time. This is the most convenient and cheapest way to avoid SSR. For the application's server-side needs, use an external data-only API. Nearly every modern full-stack framework supports SSG.

- Incremental SSG: this is reasonably well supported in Next.js (where it's called Incremental Static Regeneration), and it's also on fastify-vite's roadmap. It's particularly relevant if your rebuilds take too long and you need to be able to push new pages live quickly. It can be hard to implement in practice, but it's a very effective solution.
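To make the incremental idea a bit more concrete, here is a minimal, framework-free sketch of the stale-while-revalidate behavior that incremental regeneration builds on: the last generated HTML is always served immediately, and once it's older than a revalidation window, it gets rebuilt in the background after the response has gone out. The function name, the synchronous `build` callback and the injectable clock are assumptions for illustration; this is not how Next.js or fastify-vite actually implement it.

```javascript
// Hypothetical sketch of stale-while-revalidate page regeneration.
// `build` returns the page HTML; `now` is injectable so it can be tested.
function createRegeneratingPage (build, revalidateMs, now = Date.now) {
  let html = build() // initial build, as a static build step would do
  let builtAt = now()
  let rebuilding = false

  return function serve () {
    const stale = now() - builtAt >= revalidateMs
    if (stale && !rebuilding) {
      rebuilding = true
      // Rebuild only after the current request has been answered.
      setImmediate(() => {
        try {
          html = build()
          builtAt = now()
        } catch (err) {
          // Keep serving the previous HTML if the rebuild fails.
          console.error('rebuild failed:', err)
        } finally {
          rebuilding = false
        }
      })
    }
    // Always answer right away with the current, possibly stale, page.
    return html
  }
}

// Usage: a page considered fresh for 60 seconds.
const servePage = createRegeneratingPage(() => `<p>built at ${new Date().toISOString()}</p>`, 60_000)
console.log(servePage())
```

Serving a stale page to the request that triggers the rebuild is the trade-off that keeps every response fast; only the background rebuild pays the rendering cost.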

SSR is the slowest way to do templating, and that's essentially what it is: templating HTML pages that will load and boot up on the client, where rendering then takes over. It's just incredibly convenient to reuse, on the server, all the client code that does the rendering. In this article, I'll cover a few easy ways to improve the performance of run-of-the-mill SSR applications, not limited to Next.js but applicable to any framework.

Our sample application

I chose the with-redis example from Next.js' excellent set of examples. It's a simple voting app where users can add feature requests that other users can upvote. There's only one page, powered by a few API endpoints.

The project structure is as follows:

├── pages/

│   ├── _app.tsx

│   ├── index.tsx

│   └── api/

│       ├── create.ts

│       ├── features.ts

│       ├── subscribe.ts

│       └── vote.ts

└── lib/

    └── redis.ts

Both the API (server-only) routes and the SSR-enabled React index route module reuse the same Redis instance provided by lib/redis.ts.

It's a simple application, yet complex enough to explore some interesting considerations. If you want to follow along with the code, first clone the repository:

git clone https://github.com/nodesource/troubled-ssr-app.git

Initial load test

Before we get started, let's see how the app performs as it is. I ran it with N|Solid so I'd have easy access to a range of metrics and could easily generate CPU profiles and heap snapshots.

To connect N|Solid to the server collecting metrics, I added my N|Solid SaaS token to package.json:

"nsolid": {

"saas": "<nsolid-saas-token>"

}

Then, I built a production bundle and load-tested it with autocannon, using 500 concurrent connections for 10 seconds:

nsolid node_modules/.bin/next build

nsolid node_modules/.bin/next start

autocannon -d 10 -c 500 http://0.0.0.0:3000

| Stat | 2.5% | 50% | 97.9% | 99% | Avg | Stdev | Max |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Latency | 320 ms | 723 ms | 969 ms | 1109 ms | 606.64 ms | 216.28 ms | 1764 ms |

| Stat | 2.5% | 50% | 97.9% | 99% | Avg | Stdev | Max | Min |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Req/Sec | 874 | 1270 | 1494 | 1494 | 1263.3 | 161.41 | 1494 | 874 |
| Bytes/Sec | 5.12 MB | 7.44 MB | 8.76 MB | 8.76 MB | 7.4 MB | 946 kB | 8.75 MB | 5.12 MB |

13k requests in 10.18s, 74 MB read

A P99 latency of 1109 ms isn't catastrophic, but it's far above the 200 ms budget quoted above. At this stage, this application would scale better by delegating rendering to the browser.
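For reference, a P99 figure means 99% of the sampled requests completed at or below that latency. The following is a minimal sketch of the textbook nearest-rank percentile, run on made-up sample values; autocannon computes its percentiles from its own histogram, so its exact method may differ.

```javascript
// Nearest-rank percentile: the smallest sample such that at least p% of
// all samples are less than or equal to it.
function percentile (samples, p) {
  if (samples.length === 0) throw new RangeError('need at least one sample')
  const sorted = [...samples].sort((a, b) => a - b)
  // Multiply before dividing to keep the arithmetic exact for integer inputs.
  const rank = Math.ceil((p * sorted.length) / 100) // 1-based rank
  return sorted[Math.max(rank, 1) - 1]
}

// Made-up latencies in ms: 98 fast requests and 2 slow ones.
const latencies = [...Array(98).fill(120), 950, 1100]
console.log(percentile(latencies, 50)) // 120
console.log(percentile(latencies, 99)) // 950
```

Just two slow requests out of a hundred are enough to push P99 far above the median, which is why tail latency is the number to watch under load.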

Making things worse

SSR performance issues typically fall into two categories: data fetching and rendering. Either data fetching takes too long, or the route component is so complex that rendering it takes a lot of CPU time, blocking the Node.js event loop while it runs. To illustrate how a typically troubled SSR application can behave, let's create a problem where the process starts using more memory than it needs to. Sometimes this can lead to a serious memory leak that ultimately renders your process unresponsive.
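The rendering side of the problem is easy to reproduce in isolation. In this minimal sketch, a busy loop stands in for an expensive synchronous render (an assumption: real rendering does useful work, but it blocks the thread just the same). A timer scheduled for 0 ms can't fire until the "render" returns, and every other request handled by the process has to wait in the same way.

```javascript
const { performance } = require('node:perf_hooks')

// Stand-in for a CPU-heavy synchronous render: spins for `ms` milliseconds.
function simulateExpensiveRender (ms) {
  const start = performance.now()
  while (performance.now() - start < ms) {
    // Busy loop: nothing else on this thread can run in the meantime.
  }
  return '<html><body>rendered</body></html>'
}

// Schedule a 0 ms timer, then "render" synchronously for `renderMs`.
// The timer callback can only run after the render returns.
function measureTimerDelay (renderMs, onResult) {
  const scheduledAt = performance.now()
  setTimeout(() => onResult(performance.now() - scheduledAt), 0)
  simulateExpensiveRender(renderMs)
}

measureTimerDelay(200, (delayMs) => {
  console.log(`0 ms timer fired after ${Math.round(delayMs)} ms`)
})
```

Running it reports a delay of roughly 200 ms or more. With 500 concurrent connections, these delays queue up behind one another.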

In lib/redis.ts, let's do:

import Redis from 'ioredis'

+ import { setTimeout } from 'node:timers/promises'

- const redis = new Redis(process.env.REDIS_URL)

+ const redis = async () => {

+ if (Math.random() > 0.5) {

+ await setTimeout(3000)

+ }

+ return new Redis(process.env.REDIS_URL)

+ }

export default redis

Then, everywhere redis is used, let's change it to a function call (await redis()). This creates a new Redis client instance every time the function is called, and since none of them is ever closed, each one holds on to its own connection and file descriptor, driving memory usage up.

The condition also introduces a random 3-second delay to roughly half of the calls. This closely mimics the situation of many SSR applications in production, which have no control over the latency, availability, and performance of the external services they rely on.
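To see why the per-call factory hurts, it helps to compare it with a client created once and reused. This sketch uses a hypothetical FakeRedisClient that only counts how many connections would have been opened, so it runs without ioredis or a Redis server. The lazy sharedClient() behaves like the original module-level `new Redis(...)`, except that the client is created on first use.

```javascript
// Hypothetical stand-in for an ioredis client: each instance represents
// one TCP connection (and one file descriptor) to Redis.
let openConnections = 0
class FakeRedisClient {
  constructor (url) {
    this.url = url
    openConnections++
  }
}

// Like the modified lib/redis.ts: a brand-new client on every call,
// none of which is ever closed.
function perCallClient () {
  return new FakeRedisClient(process.env.REDIS_URL)
}

// Like the original module: one client, created lazily and then reused.
let shared = null
function sharedClient () {
  if (shared === null) shared = new FakeRedisClient(process.env.REDIS_URL)
  return shared
}

for (let i = 0; i < 100; i++) perCallClient()
console.log('per-call:', openConnections) // per-call: 100

openConnections = 0
for (let i = 0; i < 100; i++) sharedClient()
console.log('shared:', openConnections) // shared: 1
```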

Watching it blow up

| Stat | 2.5% | 50% | 97.9% | 99% | Avg | Stdev | Max |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Latency | 4 ms | 3009 ms | 6522 ms | 6816 ms | 2127.52 ms | 1887.29 ms | 6950 ms |

| Stat | 2.5% | 50% | 97.9% | 99% | Avg | Stdev | Max | Min |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Req/Sec | 0 | 255 | 586 | 586 | 276.2 | 222.53 | 586 | 92 |
| Bytes/Sec | 0 B | 1.22 MB | 2.81 MB | 2.81 MB | 1.32 MB | 1.07 MB | 2.8 MB | 440 kB |

4k requests in 10.17s, 13.2 MB read

That's pretty bad. Not only that, we're now making the Redis server unresponsive by opening way too many connections:

(nsolid:25983) MaxListenersExceededWarning: Possible EventEmitter memory leak detected. 11 server/ listeners added to [EventEmitter]. Use emitter.setMaxListeners() to increase limit

[ioredis] Unhandled error event: Error: connect ECONNRESET 127.0.0.1:6379

at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1187:16)

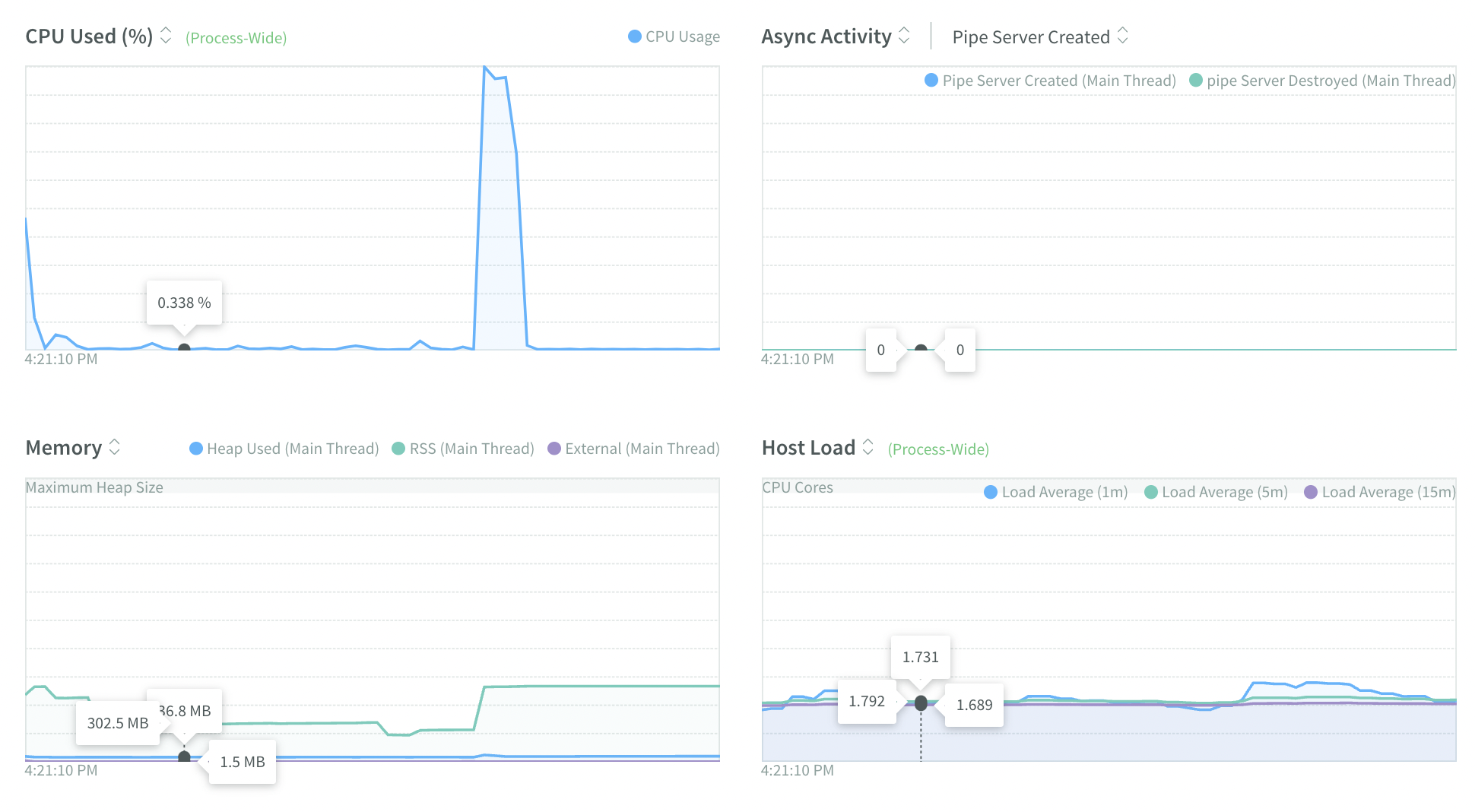

N|Solid Console shows the massive memory consumption:

Taking control

One of the first things I do when debugging SSR applications built with a specialized framework is check whether the framework has a Fastify plugin. Running SSR from a Fastify server you control gives you access to hooks, and lets you implement your API routes with all of Fastify's features and performance benefits.

const fastify = require('fastify')()

const fastifyNext = require('@fastify/nextjs')

async function main () {

await fastify.register(fastifyNext, {

dev: process.argv.includes('dev')

})

fastify.next('/*')

await fastify.listen({ port: 3000 })

console.log('Server listening on http://localhost:3000')

}

main()

Adding a cache layer

Adding a cache to SSR is the first thing to consider if going static is not an option. The logic is simple: if you're serving a large public page that gets updated every hour, and your users get a freshly rendered version every second, you're wasting server resources. Now that we have a Fastify server, adding a cache layer to our SSR should be easy, right?

+ const ssrCache = {}

async function main () {

+ fastify.addHook('onRequest', (req, reply, done) => {

+ if (ssrCache[req.url]) {

+ console.log('Sending SSR cache for', req.url)

+ reply.type('text/html')

+ reply.send(ssrCache[req.url])

+ }

+ done()

+ })

+ fastify.addHook('onSend', (req, reply, payload, done) => {

+ if (!ssrCache[req.url]) {

+ console.log('Storing SSR cache for', req.url)

+ ssrCache[req.url] = payload

+ }

+ done()

+ })

await fastify.register(fastifyNext, { dev: process.argv.includes('dev') })

fastify.next('/*')

await fastify.listen({ port: 3000 })

Wrong. @fastify/nextjs passes req.raw and reply.raw down to Next.js, which writes directly to the Node.js ServerResponse, so the response bypasses Fastify's send() pipeline and the onSend hook never sees the rendered markup. The workaround is to wrap reply.raw.end() and capture the markup as Next.js writes it. It's a bit costly in terms of performance, but since it prevents something even more expensive (SSR) from running all the time, it's worth it.

+ function spyOnEnd (chunk) {

+ if (!ssrCache[this.req.url]) {

+ ssrCache[this.req.url] = chunk

+ }

+ this.end.call(this.reply.raw, chunk)

+ }

+

async function main () {

fastify.addHook('onRequest', (req, reply, done) => {

if (ssrCache[req.url]) {

console.log('Sending SSR cache for', req.url)

reply.type('text/html')

reply.send(ssrCache[req.url])

}

- done()

- })

- fastify.addHook('onSend', (req, reply, payload, done) => {

- if (!ssrCache[req.url]) {

- console.log('Storing SSR cache for', req.url)

- ssrCache[req.url] = payload

+ if (req.url === '/') {

+ reply.raw.end = spyOnEnd.bind({ req, reply, end: reply.raw.end })

}

done()

})
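Stripped of Fastify and Next.js, the spyOnEnd() trick boils down to replacing a response's end() method with a wrapper that copies the body into a cache before calling the original. Below is a minimal sketch with captureOnEnd as a hypothetical helper name. Like the code above, it only sees the chunk passed to end(), so a response streamed through write() calls would need write() wrapped as well.

```javascript
// Wrap res.end() so the final body is stored in `cache` under `key`
// before being passed through to the original implementation.
// Works with http.ServerResponse or any object exposing end().
function captureOnEnd (res, key, cache) {
  const originalEnd = res.end
  res.end = function (chunk, ...rest) {
    // end() may be called as end(), end(cb), end(chunk) or end(chunk, encoding, cb).
    if (chunk !== undefined && chunk !== null && typeof chunk !== 'function') {
      cache.set(key, chunk)
    }
    return originalEnd.call(this, chunk, ...rest)
  }
}

// Usage with a minimal stand-in response object.
const ssrCache = new Map()
const fakeRes = {
  body: undefined,
  end (chunk) {
    this.body = chunk
    return this
  }
}
captureOnEnd(fakeRes, '/', ssrCache)
fakeRes.end('<html>rendered once</html>')
console.log(ssrCache.get('/')) // <html>rendered once</html>
```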

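The next step is to use Redis itself for the SSR cache. Unlike the in-memory object, which never expires its entries and isn't shared between processes, Redis lets us set an expiration time on each entry. For this, I'm going to use @fastify/redis to connect to Redis, and make some tweaks to the code above: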

+ const fastifyRedis = require('@fastify/redis')

function spyOnEnd (chunk) {

- if (!ssrCache[this.req.url]) {

- ssrCache[this.req.url] = chunk

- }

+ this.redis.set(this.req.url, chunk, 'ex', 60)

this.end.call(this.reply.raw, chunk)

}

async function main () {

- fastify.addHook('onRequest', (req, reply, done) => {

- if (ssrCache[req.url]) {

+ await fastify.register(fastifyRedis, { url: process.env.REDIS_URL })

+ fastify.addHook('onRequest', async (req, reply) => {

+ if (req.url !== '/') {

+ return

+ }

+ const cached = await fastify.redis.get(req.url)

+ if (cached) {

console.log('Sending SSR cache for', req.url)

reply.type('text/html')

- reply.send(ssrCache[req.url])

+ reply.send(cached)

+ } else {

+ reply.raw.end = spyOnEnd.bind({

+ req,

+ reply,

+ redis: fastify.redis,

+ end: reply.raw.end

+ })

}

- if (req.url === '/') {

- reply.raw.end = spyOnEnd.bind({ req, reply, end: reply.raw.end })

- }

- done()

})

Now SSR runs on the index route at most about once a minute: every request in between receives the markup stored in the cache instead of a freshly rendered page. (Requests arriving while an expired entry is being re-rendered will still each trigger SSR, since nothing marks a render as already in progress.)

| Stat | 2.5% | 50% | 97.9% | 99% | Avg | Stdev | Max |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Latency | 85 ms | 179 ms | 272 ms | 313 ms | 158.33 ms | 55.78 ms | 329 ms |

| Stat | 2.5% | 50% | 97.9% | 99% | Avg | Stdev | Max | Min |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Req/Sec | 3491 | 5031 | 5447 | 5447 | 4951.7 | 533.65 | 5445 | 3490 |
| Bytes/Sec | 19.8 MB | 28.6 MB | 30.9 MB | 30.9 MB | 28.1 MB | 3.03 MB | 30.9 MB | 19.8 MB |

50k requests in 10.12s, 281 MB read

That's better, but we're still at 313 ms for P99 latency.
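The one-minute window comes from the 'ex', 60 arguments, which make Redis expire the key 60 seconds after it's set. Here is a rough in-memory equivalent of that expiry behavior, as a sketch only: the TtlCache class and its injectable clock are hypothetical, and unlike Redis it evicts expired entries lazily on read and isn't shared across processes.

```javascript
// In-memory approximation of `SET key value EX seconds` followed by `GET key`.
class TtlCache {
  constructor (now = Date.now) {
    this.now = now // injectable clock, useful for testing
    this.entries = new Map()
  }

  set (key, value, ttlSeconds) {
    this.entries.set(key, { value, expiresAt: this.now() + ttlSeconds * 1000 })
  }

  // Returns null for missing or expired keys, as ioredis' get() does for misses.
  get (key) {
    const entry = this.entries.get(key)
    if (entry === undefined) return null
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key) // lazy eviction on read
      return null
    }
    return entry.value
  }
}

let clock = 0
const cache = new TtlCache(() => clock)
cache.set('/', '<html>cached</html>', 60)
clock = 59_000
console.log(cache.get('/')) // <html>cached</html>
clock = 60_000
console.log(cache.get('/')) // null
```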

Disabling SSR selectively

Another step we can take is to disable SSR selectively: for instance, unless you're serving content to a search engine, or whenever you detect the server is under high load, rendering can be temporarily delegated to the client. Let's see how easy it is to disable SSR and rely on CSR in a Next.js application.
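The high-load trigger isn't implemented in the rest of this walkthrough, but a minimal sketch of the decision could look like the following. It assumes event loop delay as the load signal, measured with Node's built-in perf_hooks.monitorEventLoopDelay() (whose histogram reports nanoseconds), and an arbitrary 200 ms threshold; both are assumptions, not tuned values. In a Fastify server, the @fastify/under-pressure plugin may also be worth evaluating for this kind of check.

```javascript
const { monitorEventLoopDelay } = require('node:perf_hooks')

// Starts sampling event loop delay. The histogram accumulates data until
// reset(), so a real server would reset it periodically (e.g. every few seconds).
function startLoopDelayMonitor (resolutionMs = 20) {
  const histogram = monitorEventLoopDelay({ resolution: resolutionMs })
  histogram.enable()
  return histogram
}

// Hypothetical policy: fall back to client-side rendering when the p99
// event loop delay exceeds `maxDelayMs`. Histogram values are nanoseconds.
function shouldRenderOnClient (histogram, maxDelayMs = 200) {
  const p99Ms = histogram.percentile(99) / 1e6
  return p99Ms > maxDelayMs
}

// Usage sketch (e.g. inside an onRequest hook):
// const loopDelay = startLoopDelayMonitor()
// if (shouldRenderOnClient(loopDelay)) reply.raw.clientOnly = true
```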

First, we need to detect whether we're serving a search engine or any other kind of bot. For that, I found the handy isbot library on npm.

+ const isbot = require('isbot')

async function main () {

await fastify.register(fastifyRedis, { url: process.env.REDIS_URL })

fastify.addHook('onRequest', async (req, reply) => {

if (req.url !== '/') {

return

}

+ if (!isbot(req.headers['user-agent'])) {

+ reply.raw.clientOnly = true

+ return

+ }

Now, unless the client is a bot, we set a flag on the ServerResponse object, which Next.js exposes as res in getServerSideProps(), to signal that rendering should happen on the client. But Next.js doesn't act on that by itself: we have to use the custom App component (pages/_app.tsx) to selectively disable SSR for the route component.

First, in pages/index.tsx:

- import type { NextApiRequest } from 'next'

+ import type { NextApiRequest, NextApiResponse } from 'next'

+ type ModifiedNextApiResponse = NextApiResponse & {

+ clientOnly: boolean

+ }

- export async function getServerSideProps({ req }: { req: NextApiRequest }) {

+ export async function getServerSideProps({ req, res }: {

+ req: NextApiRequest,

+ res: ModifiedNextApiResponse

+ }) {

- return { props: { features, ip } }

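+ // res.clientOnly is undefined for bot requests, and Next.js refuses to serialize undefined props

+ return { props: { features, ip, clientOnly: Boolean(res.clientOnly) } }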

Then in pages/_app.tsx:

+ const isServer = typeof window === 'undefined'

function MyApp({ Component, pageProps }: AppProps) {

return (

<>

- <Component {...pageProps} />

+ {pageProps.clientOnly &&

+ <div suppressHydrationWarning={true}>

+ {!isServer ? <Component {...pageProps} /> : null}

+ </div>

+ }

+ {!pageProps.clientOnly && <Component {...pageProps} />}

There's still a bit of SSR taking place, in that some React code still runs on the server, but the above effectively prevents the route component from being rendered there, so the server can deliver the HTML for client-side rendering much faster.

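Note that this also requires wrapping the route component in an element with the suppressHydrationWarning attribute: the server renders that element empty, while the client renders the route component inside it, so the markup won't match during hydration.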

Now that we're caching SSR output and skipping SSR altogether except for search engines, performance should be much better, right?

| Stat | 2.5% | 50% | 97.9% | 99% | Avg | Stdev | Max |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Latency | 3 ms | 1035 ms | 6128 ms | 6669 ms | 1764.85 ms | 1702.63 ms | 6753 ms |

| Stat | 2.5% | 50% | 97.9% | 99% | Avg | Stdev | Max | Min |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Req/Sec | 0 | 276 | 513 | 513 | 274.9 | 181.08 | 513 | 61 |
| Bytes/Sec | 0 B | 1.31 MB | 2.45 MB | 2.45 MB | 1.31 MB | 863 kB | 2.44 MB | 291 kB |

3k requests in 10.13s, 13.1 MB read

Wait, what? Three thousand requests? 6669 ms for P99? What's going on?

if (!isbot(req.headers['user-agent'])) {

reply.raw.clientOnly = true

- return

}

As it turns out, the return statement removed above completely turned off SSR caching in the previous iteration: for every non-bot request, the hook exited before looking up the cache or installing the end() spy, so every such request went through SSR. Removing it fixes the issue, since SSR caching is enabled again. But it also reminded me that the app needed one final fix: removing the chaos I introduced when I changed lib/redis.ts.

With that in place, things are much better now:

| Stat | 2.5% | 50% | 97.9% | 99% | Avg | Stdev | Max |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Latency | 26 ms | 52 ms | 90 ms | 153 ms | 47.6 ms | 25.83 ms | 588 ms |

| Stat | 2.5% | 50% | 97.9% | 99% | Avg | Stdev | Max | Min |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Req/Sec | 6367 | 17455 | 18991 | 18991 | 16588.55 | 3397.87 | 18983 | 6364 |
| Bytes/Sec | 1.95 MB | 5.36 MB | 5.83 MB | 5.83 MB | 5.09 MB | 1.04 MB | 5.83 MB | 1.95 MB |

183k requests in 11.13s, 56 MB read

A CPU spike is still visible, but this was 500 concurrent requests over 10 seconds on my humble laptop, and a P99 latency of 153 ms is excellent. Also notice that memory usage stays well-behaved, in a flat line.
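The lesson from that stray return is that the bot check should only set the clientOnly flag, never skip the cache lookup. Here is a framework-free sketch of the corrected decision for a single request. It also includes one hypothetical tweak that isn't in the app: the hook above caches by URL alone, so a client-only shell cached for a human visitor could later be served to a crawler, and including the render mode in the cache key avoids that. planRequest() and the key format are illustrative names only.

```javascript
// Decide how to handle a request, given whether the client is a bot
// (e.g. the result of isbot(userAgent)) and a cache lookup function
// that returns null or undefined on a miss.
function planRequest (url, isBot, lookup) {
  const clientOnly = !isBot // only a flag: it must not short-circuit caching
  const cacheKey = `${url}:${clientOnly ? 'csr' : 'ssr'}`
  const cached = lookup(cacheKey)
  if (cached != null) {
    return { action: 'send-cached', cacheKey, clientOnly, body: cached }
  }
  return { action: 'render-and-store', cacheKey, clientOnly }
}

const cache = new Map([['/:csr', '<div id="__next"></div>']])
console.log(planRequest('/', false, (key) => cache.get(key)).action) // send-cached
console.log(planRequest('/', true, (key) => cache.get(key)).action) // render-and-store
```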

Other improvements

Now, if this were an application serving millions of requests per day and hundreds of users simultaneously, I'd look into other ways of reducing the cost of running it. Perhaps the most radical solution would be to drop React itself, given there are alternatives with better SSR performance, but if that's not an option:

- On the page itself, turn off SSR selectively for elements located below the fold. The subscription form could be rendered on the client side only.

- The API routes are all served by Next.js, which packs an internal server similar to Connect and Express. It makes sense to migrate them over to the Fastify server, where they can benefit from fast serialization and also validation when needed.

- In pages/api/create.ts, the uuid package can be replaced with crypto.randomUUID(), now natively available in recent Node.js versions.
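For the last point, a minimal sketch of the built-in replacement, assuming create.ts only needs random (version 4) UUIDs, which is what crypto.randomUUID() produces. The newFeatureId() wrapper is a hypothetical name, not something from the app.

```javascript
const { randomUUID } = require('node:crypto')

// Generates an RFC 4122 version 4 UUID with Node's built-in crypto module,
// without the uuid package.
function newFeatureId () {
  return randomUUID()
}

console.log(newFeatureId()) // prints a new random UUID on each run
```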

Beyond that, I can't really think of any other improvements outside the realm of dropping Next.js itself or going extremely low-tech. In my next article I'll rewrite this application with Fastify DX and Solid.js.