Containerizing Node.js Applications with Docker

Application containers have emerged as a powerful tool in modern software development. Lighter and more resource efficient than traditional virtual machines, containers offer IT organizations new opportunities in version control, deployment, scaling, and security.

This post will address what exactly containers are, why they are proving to be so advantageous, how people are using them, and best practices for containerizing your Node.js applications with Docker.

What’s a Container?

Put simply, containers are running instances of container images. Images are layered alternatives to virtual machine disks that allow applications to be abstracted from the environment in which they are actually being run. Container images are executable, isolated software with access to the host's resources, network, and filesystem. These images are created with their own system tools, libraries, code, runtime, and associated dependencies hardcoded. This allows for containers to be spun up irrespective of the surrounding environment. This everything-it-needs approach helps silo application concerns, providing improved systems security and a tighter scope for debugging.

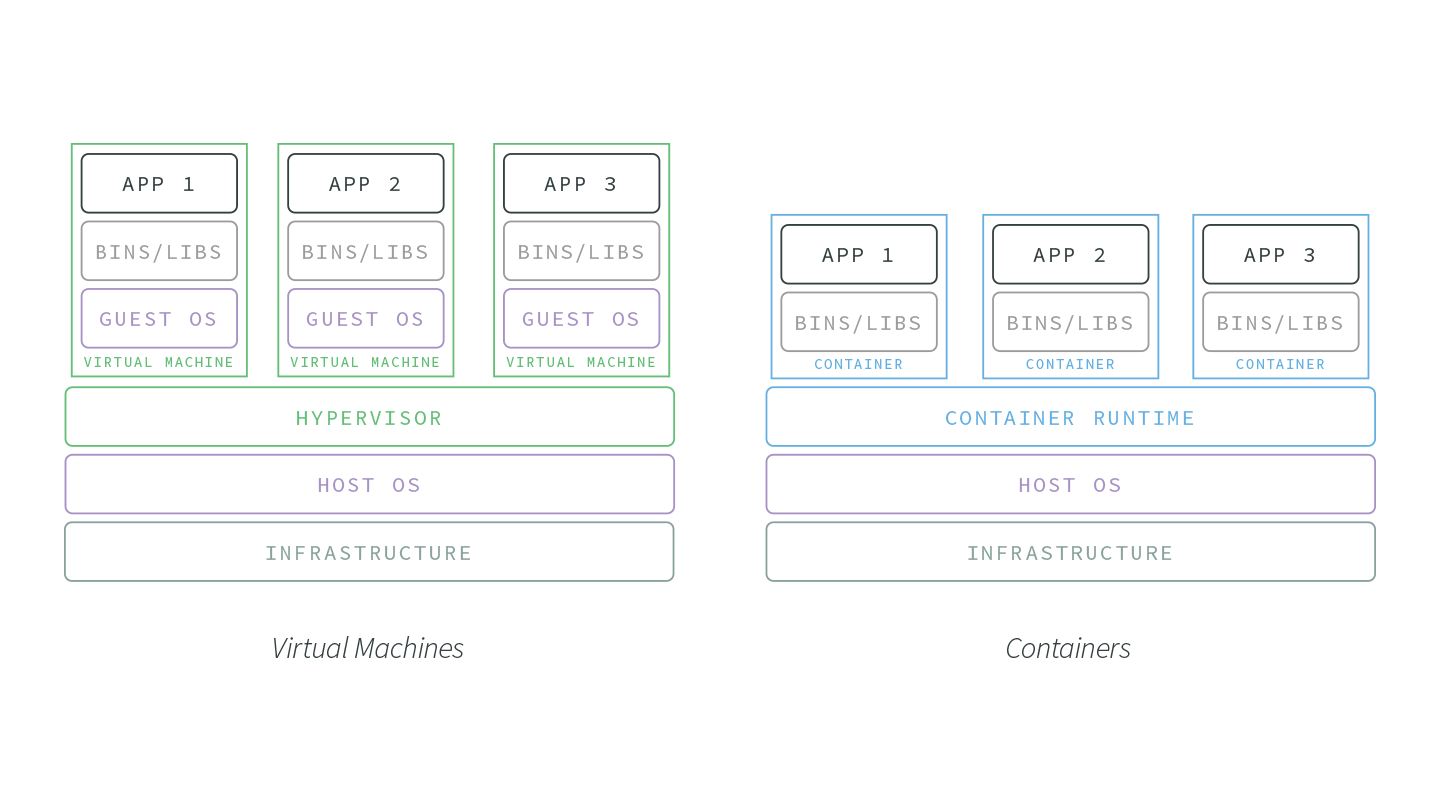

Unlike traditional virtual machines, containers share access to the host operating system through a container runtime. This shared access to the host OS resources enables performance and resource efficiencies not found in other virtualization methods.

Imagine a container image that requires 500 MB. In a containerized environment, this 500 MB can be shared between hundreds of containers, assuming they are all running the same base image. VMs, on the other hand, would need that 500 MB per virtual machine. This makes containers much more suitable for horizontal scaling and resource-restricted environments.
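To make the comparison concrete, here is a rough sketch of the arithmetic in JavaScript. The function names and the 5 MB writable layer per container are illustrative assumptions, not measurements.

```javascript
// Rough storage-footprint comparison; all figures are illustrative assumptions.

// Containers share one read-only copy of the base image; each container
// adds only its own (usually small) writable layer.
function containerFootprintMb(baseImageMb, perContainerMb, count) {
  return baseImageMb + perContainerMb * count;
}

// Each VM carries its own full copy of the image.
function vmFootprintMb(imageMb, count) {
  return imageMb * count;
}

console.log(containerFootprintMb(500, 5, 100)); // 1000
console.log(vmFootprintMb(500, 100));           // 50000
```

Under these assumptions, 100 containers need roughly 1 GB, while 100 VMs need roughly 50 GB.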

Why Application Containers?

The lightweight and reproducible nature of containers has made them an increasingly favored option for organizations looking to develop software applications that are scalable, highly available, and version controlled.

Containers offer several key advantages to developers:

- Lightweight and Resource Efficient. Compared to VMs, which run a full guest operating system for each application or process, containers have significantly less of an impact on memory, CPU usage, and disk space.

- Immutable. Containers are generated from a single source of truth, an image. If changes are committed to an image, a new image is made. This makes container image changes easy to track, and deployment rollbacks intuitive. The reproducibility and stability of containers help development teams avoid configuration drift, making things like version testing and mirroring development and production environments much simpler.

- Portable. The isolated and self-reliant nature of containers makes them a great fit for applications that need to operate across a host of services, platforms, and environments. They can run on Linux, Windows, and macOS. Provide them from the cloud, on premises, or wherever your infrastructure dictates.

- Scalable and Highly Available. Containers are easily reproducible and can be made to dynamically respond to traffic demands, with orchestration services such as Azure Container Instances, Google Kubernetes Engine, and Amazon ECS making it simpler than ever to generate or remove containers from your infrastructure.

Application Container Use Cases

Not all applications and organizations are going to have the same infrastructure requirements. The aforementioned benefits of containers make them particularly adept at addressing the following needs:

DevOps Organizations

For teams working to practice ‘infrastructure as code’ and seeking to embrace the DevOps paradigm, containers offer unparalleled opportunities. Their portability, resistance to configuration drift, and quick boot time make containers an excellent tool for quickly and reproducibly testing different code environments, regardless of machine or location.

Microservice and Distributed Architectures

A common phrase in microservice development is “do one thing and do it well,” and this aligns tightly with application containers. Containers offer a great way to wrap microservices and isolate them from the wider application environment. This is very useful when wanting to update specific (micro-)services of an application suite without updating the whole application.

A/B testing

Containers make it easy to roll out multiple versions of the same application. When coupled with incremental rollouts, containers can keep your application in a dynamic state that is responsive to testing. Want to test a new performance feature? Spin up a new container, add some updates, route 1% of traffic to it, and collect user and performance feedback. As the changes stabilize and your team decides to apply them to the application at large, containers can make this transition smooth and efficient.

Containers and Node.js

Because of application containers’ suitability for focused application environments, Node.js is arguably the best runtime for containerization.

- Explicit Dependencies. Containerized Node.js applications can lock down dependency trees, and maintain stable package.json, package-lock.json, or npm-shrinkwrap.json files.

- Fast Boot and Restart. Containers are lightweight and boot quickly, making them a strategic pair for Node.js applications. One of the most lauded features of Node.js is its impressive startup time. This robust boot performance gets terminated processes restarted quickly and applications stabilized; containerization provides a scalable solution to maintaining this performance.

- Scaling at the Process Level. Similar to the Node.js best practice of spinning up more processes instead of more threads, a containerized environment will scale up the number of processes by increasing the number of containers. This horizontal scaling creates redundancy and helps keep applications highly available, without the significant resource cost of a new VM per process.

Dockerizing Your Node.js Application

Docker Overview

Docker is a layered filesystem for shipping images, and allows organizations to abstract their applications away from their infrastructure.

With Docker, images are generated via a Dockerfile. This file provides configurations and commands for programmatically generating images.

Each Docker command in a Dockerfile adds a ‘layer’. The more layers, the larger the resulting container.

Here is a simple Dockerfile example:

```
1 FROM node:8
2
3 WORKDIR /home/nodejs/app
4
5 COPY . .
6 RUN npm install --production
7
8 CMD ["node", "index.js"]
```

The FROM instruction designates the base image that will be used; in this case, it is the image for the Node.js 8 LTS release line.

The WORKDIR instruction on line 3 tells Docker that the working directory for every subsequent instruction is the application directory, /home/nodejs/app. Docker creates this directory if it does not already exist, so no separate step is needed to make it.

Line 5 copies everything in the current directory into the current directory of the image, which is /home/nodejs/app, as set by the WORKDIR instruction on line 3. The RUN instruction takes shell commands as its arguments; on line 6, it performs the production install of the dependencies.

Finally, on line 8, we pass Docker a command and argument to run the Node.js app inside the container.

The above example provides a basic, but ultimately problematic, Dockerfile.

In the next section we will look at some Dockerfile best practices for running Node.js in production.

Dockerfile Best Practices

Don’t Run the Application as root

Make sure the application running inside the Docker container is not being run as root.

```
1 FROM node:8
2
3 RUN groupadd -r nodejs && useradd -m -r -g nodejs -s /bin/bash nodejs
4
5 USER nodejs
6
7 ...
```

In the above example, a few lines have been added to the original Dockerfile to create a new user, nodejs, and switch to it. This way, in the event that a vulnerability in the application is exploited and someone manages to get into the container at the system level, the most they can be is the user nodejs, which does not have root permissions and does not exist on the host.
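As a defensive complement to the USER instruction, the application itself can refuse to start as root. The following is a minimal sketch rather than part of the original Dockerfile; `isRunningAsRoot` and `assertNotRoot` are hypothetical helper names, and the check relies on `process.getuid()`, which Node.js provides only on POSIX platforms.

```javascript
// Hypothetical startup guard: fail fast if the process is running as root.
// process.getuid() is only available on POSIX platforms, so the check is
// skipped where it does not exist (for example, on Windows).
function isRunningAsRoot(proc = process) {
  return typeof proc.getuid === 'function' && proc.getuid() === 0;
}

function assertNotRoot(proc = process) {
  if (isRunningAsRoot(proc)) {
    throw new Error('Refusing to run as root; set USER in the Dockerfile.');
  }
}
```

Calling `assertNotRoot()` at the top of index.js would turn a missing USER instruction into an immediate, visible startup failure.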

Cache node_modules

Docker builds each line of a Dockerfile individually. This forms the 'layers' of the Docker image. As an image is built, Docker caches each layer.

```
7 ...
8 WORKDIR /home/nodejs/app
9
10 COPY package.json .
11
12 RUN npm install --production
13 COPY . .
14
15 CMD ["node", "index.js"]
16 ...
```

On line 10 of the above Dockerfile, the package.json file is being copied to the working directory established on line 8. After the npm install on line 12, line 13 copies the entire current directory into the working directory (the image).

If no changes are made to your package.json, Docker won’t rebuild the npm install image layer, which can dramatically improve build times.
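The caching behavior can be illustrated with a simplified model. This sketch is not how Docker is implemented; it only assumes the rule that a step is rebuilt when one of its inputs changes or when any earlier step was rebuilt. The `stepsToRebuild` function and the file lists are hypothetical.

```javascript
// Simplified model of Docker's build cache (illustrative only).
// Each step lists the files it depends on; a step is rebuilt if any of its
// inputs changed or if any earlier step was rebuilt.
function stepsToRebuild(steps, changedFiles) {
  const changed = new Set(changedFiles);
  let invalidated = false;
  const rebuilt = [];
  for (const step of steps) {
    if (!invalidated && step.inputs.some((file) => changed.has(file))) {
      invalidated = true;
    }
    if (invalidated) rebuilt.push(step.name);
  }
  return rebuilt;
}

// "COPY . ." really depends on the whole build context; two files stand in
// for it here.
const steps = [
  { name: 'COPY package.json .', inputs: ['package.json'] },
  { name: 'RUN npm install --production', inputs: [] },
  { name: 'COPY . .', inputs: ['package.json', 'index.js'] },
];

console.log(stepsToRebuild(steps, ['index.js']));
// [ 'COPY . .' ]
console.log(stepsToRebuild(steps, ['package.json']).length);
// 3
```

Editing only application code leaves the npm install layer cached, while touching package.json invalidates it and everything after it.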

Set Up Your Environment

It’s important to explicitly set any environment variables that your Node.js application will be expecting to remain constant throughout the container lifecycle.

```
12 ...
13 COPY . .
14
15 ENV NODE_ENV=production
16
17 CMD ["node", "index.js"]
```
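On the application side, it can help to read these variables once at startup and fail fast when one is missing. The following is a minimal sketch under that assumption; `loadConfig` is a hypothetical helper, and PORT is an assumed variable used only for illustration.

```javascript
// Hypothetical config loader: read required settings from the environment
// once at startup and fail fast when one is missing.
function loadConfig(env = process.env) {
  const nodeEnv = env.NODE_ENV;
  if (!nodeEnv) {
    throw new Error('NODE_ENV is not set; define it with ENV in the Dockerfile.');
  }
  // PORT is an assumed, optional variable used here only for illustration.
  const port = Number.parseInt(env.PORT || '3000', 10);
  if (Number.isNaN(port)) {
    throw new Error(`PORT must be a number, got "${env.PORT}"`);
  }
  return Object.freeze({ nodeEnv, port, isProduction: nodeEnv === 'production' });
}
```

Freezing the returned object reflects the idea that these values should stay constant for the lifetime of the container.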

With aims of comprehensive image and container services, DockerHub “provides a centralized resource for container image discovery, distribution and change management, user and team collaboration, and workflow automation throughout the development pipeline.”

To link the Docker CLI to your DockerHub account, use docker login:

```
docker login [OPTIONS] [SERVER]
```

Private GitHub Accounts and npm Modules

Docker runs its builds inside of a sandbox, and this sandbox environment doesn’t have access to information like SSH keys or npm credentials. To bypass this constraint, there are a couple of recommended options available to developers:

- Store keys and credentials on the CI/CD system. The security concerns of having sensitive credentials inside of the Docker build can be avoided entirely by never putting them in there in the first place. Instead, store them on and retrieve them from your infrastructure’s CI/CD system, and manually copy private dependencies into the image.

- Use an internal npm server. Using a tool like Verdaccio, set up an npm proxy that keeps the flow of internal modules and credentials private.

Be Explicit with Tags

Tags help differentiate between different versions of images. Tags can be used to identify builds, teams that are working on the image, and literally any other designation that is useful to an organization for managing development of and around images. If no tag is explicitly added, Docker will assign a default tag of latest after running docker build. As a tag, latest is okay in development, but can be very problematic in staging and production environments.

To avoid the problems around latest, be explicit with your build tags. Here is an example script assigning tags with environment variables for the build’s git sha, branch name, and build number, all three of which can be very useful in versioning, debugging, and deployment management:

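```sh
#!/bin/sh
# Tag the freshly built image with the git SHA, branch name, and build number.
# SHA1, BRANCH_NAME, and BUILD_NUM are expected to be set by the CI system.
docker tag helloworld:latest "yourorg/helloworld:$SHA1"
docker tag helloworld:latest "yourorg/helloworld:$BRANCH_NAME"
docker tag helloworld:latest "yourorg/helloworld:build_$BUILD_NUM"
```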
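Branch names often contain characters that are not valid in a Docker tag, such as the slash in feature/login. As a sketch of how a build script might handle this, the following hypothetical helpers derive sanitized tags from the same three values; `sanitizeTag`, `buildTags`, and the sample values are assumptions for illustration.

```javascript
// Hypothetical helpers that derive explicit image tags from CI variables.
// Docker tags may contain only letters, digits, underscores, periods, and
// hyphens, must not start with a period or hyphen, and are limited to 128
// characters, so branch names such as "feature/login" need sanitizing.
function sanitizeTag(value) {
  const cleaned = String(value)
    .replace(/[^A-Za-z0-9_.-]/g, '-')
    .replace(/^[.-]+/, '')
    .slice(0, 128);
  if (cleaned === '') throw new Error(`Cannot derive a tag from "${value}"`);
  return cleaned;
}

function buildTags(repo, { sha, branch, buildNum }) {
  return [sha, branch, `build_${buildNum}`].map((tag) => `${repo}:${sanitizeTag(tag)}`);
}

console.log(buildTags('yourorg/helloworld', {
  sha: '3f2a9c1',
  branch: 'feature/login',
  buildNum: 42,
}));
```

With these inputs, the branch tag becomes yourorg/helloworld:feature-login, while the SHA and build-number tags pass through unchanged.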

Read more on tagging here.

Containers and Process Management

Containers are designed to be lightweight and map well at the process level, which helps keep process management simple: if the process exits, the container exits. However, this 1:1 mapping is an idealization that is not always maintained in practice.

As Docker containers do not come with a process manager included, add a tool for simple process management.

dumb-init from Yelp is a simple, lightweight process supervisor and init system designed to run as PID 1 inside container environments. In a Linux container, PID 1 is normally assigned to the application process itself, and PID 1 has its own kernel-signaling idiosyncrasies that complicate process management. Running dumb-init as PID 1 instead provides a level of abstraction that allows it to act as a signal proxy, ensuring expected process behavior.
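A signal proxy only forwards signals; the Node.js application still decides what to do with them. The following is a minimal sketch of a graceful shutdown handler, assuming a server object with a close(callback) method such as the one returned by http.createServer(). The helper name `installShutdownHandlers` and the 10-second timeout are illustrative assumptions.

```javascript
// Hypothetical helper: close the server cleanly when the container is
// asked to stop. `server` is anything with a close(callback) method, such
// as the object returned by http.createServer().
function installShutdownHandlers(server, proc = process, timeoutMs = 10000) {
  let shuttingDown = false;

  const shutdown = (signal) => {
    if (shuttingDown) return; // Ignore repeated signals.
    shuttingDown = true;
    console.log(`Received ${signal}, closing server`);

    // Force an exit if open connections keep close() from finishing.
    const timer = setTimeout(() => proc.exit(1), timeoutMs);
    if (typeof timer.unref === 'function') timer.unref();

    server.close(() => {
      clearTimeout(timer);
      proc.exit(0);
    });
  };

  // docker stop sends SIGTERM; Ctrl+C in an attached terminal sends SIGINT.
  for (const signal of ['SIGTERM', 'SIGINT']) {
    proc.on(signal, () => shutdown(signal));
  }
}
```

In index.js this would be called once, right after the server starts listening, so that a stop request lets in-flight requests finish before the process exits.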

What to Include in Your Application Containers

A principal advantage of containers is that they provide only what is needed. Keep this in mind when adding layers to your images.

Here is a checklist for what to include when building container images:

- Your application code and its dependencies.

- Necessary environment variables.

- A simple signal proxy for process management, like dumb-init.

That’s it.

Conclusion

Containers are a modern virtualization solution best-suited for infrastructures that call for efficient resource sharing, fast startup times, and rapid scaling.

Application containers are being used by DevOps organizations working to implement “infrastructure as code,” teams developing microservices and relying on distributed architectures, and QA groups leveraging strategies like A/B testing and incremental rollouts in production.

Just as the recommended approach for single-threaded Node.js is 1 process: 1 application, best practice for application containers is 1 process: 1 container. This mirrored relationship arguably makes Node.js the most suitable runtime for container development.

Docker is an open platform for developing, shipping, and running containerized applications. Docker enables you to separate your applications from your infrastructure so you can deliver software quickly. When using Docker with Node.js, keep in mind:

- Don’t run the application as root

- Cache node_modules

- Use your CI/CD system or an internal server to keep sensitive credentials out of the container image

- Be explicit with build tags

- Keep containers light!

One Last Thing

If you’re interested in deploying Node.js applications within Docker containers, you may be interested in N|Solid. We work to make sure Docker is a first-class citizen for enterprise users of Node.js who need insight and assurance for their Node.js deployments.

Deploying N|Solid with Docker is as simple as changing your FROM statement!

If you’d like to tune into the world of Node.js, Docker, Kubernetes, and large-scale Node.js deployments, be sure to follow us at @NodeSource on Twitter.