Why the New V8 is so Damn Fast

Many in the Node.js community were excited to see recent updates to V8, which impacted the entire V8 compiler architecture as well as large parts of the Garbage Collector. TurboFan replaced Crankshaft, and Orinoco now collects garbage in parallel, among other changes that were applied.

Node.js version 8 shipped with this new and improved version of V8, which means we can finally write idiomatic and declarative JavaScript without worrying about incurring performance overhead due to compiler shortcomings. At least, this is what the V8 team tells us.

As part of my work with NodeSource I researched these latest changes, by consulting blog articles published by the V8 team, reading the V8 source code itself, and by building tools that provide the means to verify specific performance metrics.

I collected those findings inside a github repository v8-perf to make them available to the community. They are also the basis for a talk I'll give at NodeSummit this week and a series of blog posts, starting with this one.

As the changes are complex and many, I plan to provide an introduction in this post and explore this topic in more details in future blog posts in the series. Thus, you could consider this a tl;dr of what's yet to come.

For those of you eager to learn more immediately head on over to v8-perf or consult the resources provided below.

The New V8 Compiler Pipeline

As most of you will know, previous V8 versions suffered from so-called optimizations killers that seemed impossible to fix in the engine. The V8 team also had a difficult time implementing new JavaScript language features with good performance characteristics.

The main reason for this was that the V8 architecture had become very hard to change and extend. Crankshaft, the optimizing compiler, had not been implemented with a constantly-evolving language in mind, and the lack of separation between layers in the compiler pipeline became a problem. In some extreme cases, developers had to write assembly code by hand for the four supported architectures.

The V8 team realized that this was not a sustainable system, especially with the many new language features that would need to be added as JavaScript itself evolved more rapidly. Thus, a new compiler architecture was designed from the ground up. It is split into three cleanly separated layers, the frontend, optimization layer, and backend.

The frontend is mostly responsible for the generation of bytecode run by the Ignition interpreter, while the optimization layer improves the code's performance via the TurboFan optimizing compiler. Lower level tasks, like machine-level optimization, scheduling, and generation of machine code for the supported architectures, are performed by the backend.

The separation of the backend alone resulted in about 29% less architecture-specific code even though at this point nine architectures are supported.

Smaller Performance Cliffs

The primary goals of this new V8 architecture include the following:

- smaller performance cliffs

- improved startup time

- improved baseline performance

- reduced memory usage

- support for new language features

The first three goals are related to the implementation of the Ignition interpreter, and the third goal is partially achieved via improvements in that area as well.

To start, I will focus on this part of the architecture and explain it in conjunction with those goals.

In the past the V8 team focused on the performance of optimized code and somewhat neglected that of interpreted bytecode; this resulted in steep performance cliffs, which made runtime characteristics of an application very unpredictable overall. An application could be running perfectly fine until something in the code tripped up Crankshaft, causing it to deoptimize and resulting in a huge performance degradation - in some cases, sections would execute 100x slower. To avoid falling off the cliff, developers learned how to make the optimizing compiler happy by writing Crankshaft Script.

However, it was shown that for most web pages the optimizing compiler isn't as important as is the interpreter, as code needs to run fast quickly. There is no time to warm up your code and since speculative optimizations aren't cheap, the optimizing compiler even hurt performance in some cases.

The solution was to improve the baseline performance of interpreter bytecode. This is achieved by passing the bytecode through inline-optimization stages as it is generated, resulting in highly optimized and small interpreter code which can execute the instructions and interact with rest of V8 VM in a low overhead manner.

Since the bytecode is small, memory usage was reduced as well and as it runs decently fast further optimizations can be delayed. Thus, more information can be collected via Inline Caches before an optimization is attempted, causing less overhead due to deoptimizations and re-optimizations that occur when assumptions about how the code will execute are violated.

Running bytecode instead of TurboFan optimized code won't have the detrimental effect it had in the past since it is performing closer to the optimized code; this means any performance cliff dropoffs are much smaller.

Making Sure Your Code Runs at Peak Performance

When using the new V8, writing declarative JavaScript and using good data structures and algorithms is all you need to worry about in most cases. However in hot code paths of your application you may want to ensure that it is running at peak performance.

The TurboFan optimizing compiler uses advanced techniques to make hot code run as fast as possible. These techniques include the sea of nodes approach, innovative scheduling, and many more which will be explained in future blog posts.

TurboFan relies on input type information collected via inline caches while functions run via the Ignition interpreter. Using that information it generates the best possible code handling the different types it encountered.

The fewer function input type variations the compiler has to consider, the smaller and faster the resulting code will be. Therefore, you can help TurboFan make your code fast by keeping your functions monomorphic or at least polymorphic.

- monomorphic: one input type

- polymorphic: two to four input types

- megamorphic: five or more input types

Inspecting Performance Characteristics with Deoptigate

Instead of trying to achieve peak performance blindly, I recommend first seeking some insights into how your code is handled by the optimizing compiler and inspecting the cases that result in less-optimal code.

To make that easier I created deoptigate which is designed to provide insight into optimizations, deoptimizations and mono/poly/megamorphism of your functions.

Let's look at a simple example script that I will profile with deoptigate.

I've defined two vector functions: add and subtract.

function add(v1, v2) {

return {

x: v1.x + v2.x

, y: v1.y + v2.y

, z: v1.z + v2.z

}

}

function subtract(v1, v2) {

return {

x: v1.x - v2.x

, y: v1.y - v2.y

, z: v1.z - v2.z

}

}

Next, I warm up these functions by executing them with objects of the same type (same properties assigned in the same order) in a tight loop.

const ITER = 1E3

let xsum = 0

for (let i = 0; i < ITER; i++) {

for (let j = 0; j < ITER; j++) {

xsum += add({ x: i, y: i, z: i }, { x: 1, y: 1, z: 1 }).x

xsum += subtract({ x: i, y: i, z: i }, { x: 1, y: 1, z: 1 }).x

}

}

At this point add and subtract ran hot and should have been optimized.

Now I execute them again, passing objects to add that don't have the exact same type as

before since their properties are assigned in a different order ({ y: i, x: i, z: i }).

To subtract I pass the same types of objects as before.

for (let i = 0; i < ITER; i++) {

for (let j = 0; j < ITER; j++) {

xsum += add({ y: i, x: i, z: i }, { x: 1, y: 1, z: 1 }).x

xsum += subtract({ x: i, y: i, z: i }, { x: 1, y: 1, z: 1 }).x

}

}

Let's run this code and inspect it with deoptigate.

node --trace-ic ./vector.js

deoptigate

When executing our script with the --trace-ic flag, V8 writes the information we need to an isolate-v8.log file. When deoptigate is run from the same folder it processes that file and opens an interactive visualization of the contained data.

It is a web application, so you can open it in your browser to follow along.

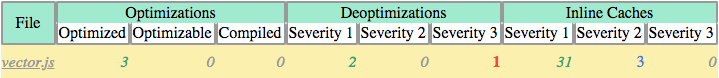

deoptigate provides us with a summary of all files, in our case just the vector.js.

For each file it shows related Optimizations, Deoptimizations and Inline Cache information. Here green means no problem, blue are minor perf issues and red are potentially major perf issue that should be investigated. We can expand the details for a file simply by clicking on its name.

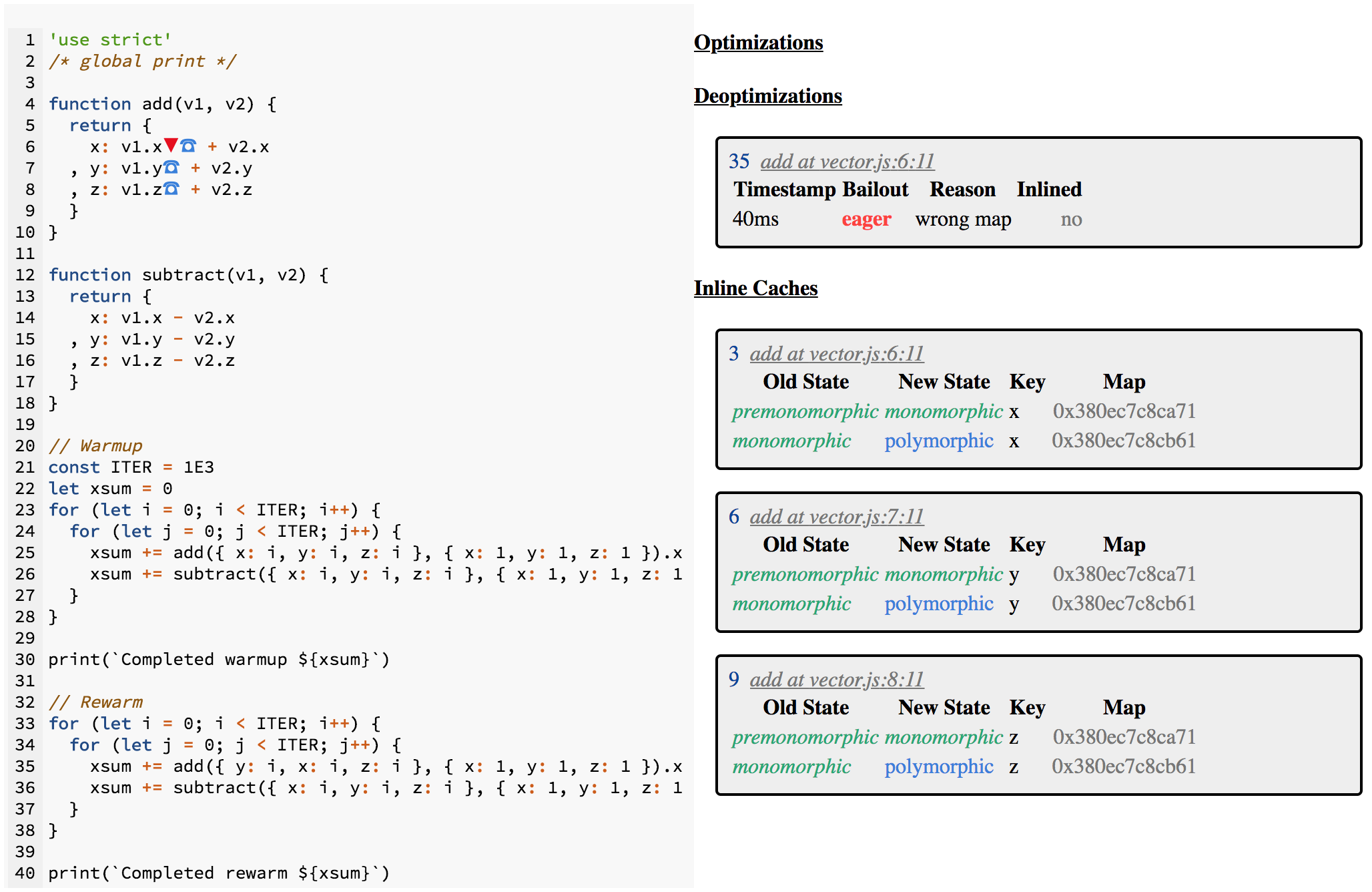

We are provided with the source of the file on the left, with annotations pointing out potential performance problems. On the right, we can learn more details about each problem. Both views function in tandem; clicking an annotation on the left highlights more details about it on the right and vice versa.

At a quick glance we can see that subtract shows no potential issues, but add does. Clicking on the red triangle in the code highlights the related deoptimization information on the right. Note the reason wrong map for the eager bailout.

Clicking on any of the blue telephone icons reveals more information. Namely, we find that the function became polymorphic. As we can see this was due to a Map mismatch as well.

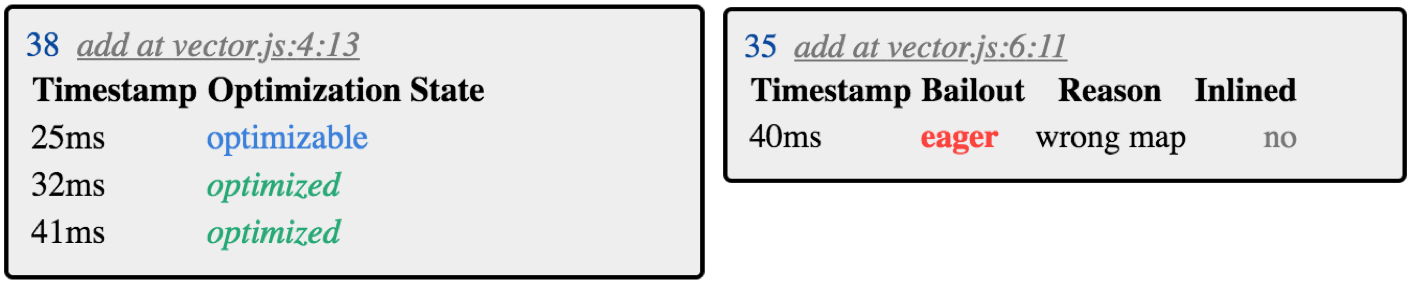

Checking Low Severities on the top of the page reveals more context regarding the deoptimization as now we are also presented with the optimizations applied to add including timestamps.

We see that add was optimized after 32ms. At around 40ms it was provided an input type that the optimized code didn't account for - hence the wrong map - and was deoptimized at which point it reverted to running Ignition bytecode while collecting more Inline Cache information. Very quickly after that at 41ms it was optimized again.

In summary, the add function executed via optimized code in the end, but that code needed to handle two types of inputs (different Maps) and thus was larger and not as optimal as before.

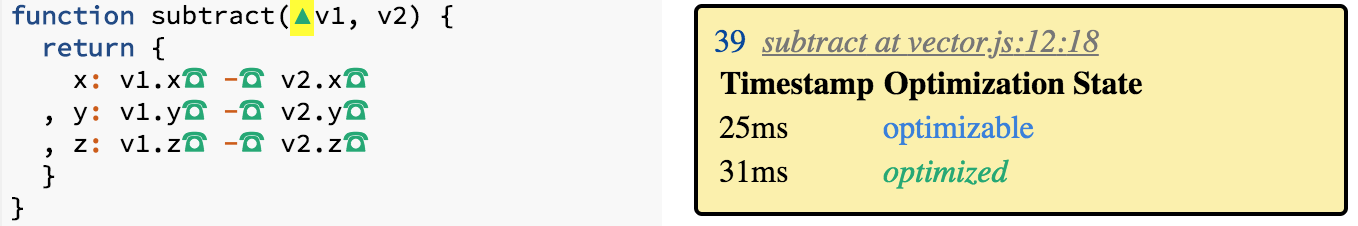

On the contrary the subtract function was only optimized once as we can verify by clicking the green pointed up triangle inside it's function signature.

Why different Maps?

Some of you may wonder why V8 considers the objects created via the { x, y, z } assignment different from the ones created via { y, x, z } given that they have the exact same properties just assigned in a different order.

This is due to the way maps are created when JavaScript objects are initialized, and is a topic for another post (I will also explain this in more detail as part of my talk at Node Summit).

So make sure to come back for more articles in this multi-part series, and if you're attending Node Summit please check out my talk Understanding Why the New V8 is so Damn Fast, One Demo at a Time on Tue, July 24th, 2:55pm at NodeSummit at the Fisher West location.

Hope to see you there!