Dive into Node.js Streams

Streams are one of the oldest and most misunderstood concepts in Node.js, and that is not the developers' fault. The streams API can be hard to understand, which can lead to misunderstandings, incorrect usage and, in the worst cases, memory leaks, performance issues, or weird behavior in your application.

Before Node.js, I used to write code using Ruby on Rails and PHP; moving to the Node.js world was complicated. Promises (and later async/await), concurrency, asynchronous operations, ticks, the event loop, and more were all completely new concepts for me. Dealing with unknown ideas can be a bit overwhelming, but the feeling of success that comes from mastering them and seeing your code become more performant and predictable is priceless.

Before jumping into streams: this blog post was inspired by Robert Nagy and his talk "Hardening Node Core Streams". I also want to give special thanks to him for his hard work on Node.js streams and more.

NodeTLV (2021). Hardening Node Core Streams - https://nsrc.io/3trQpGa

Let's dive into Node.js Streams

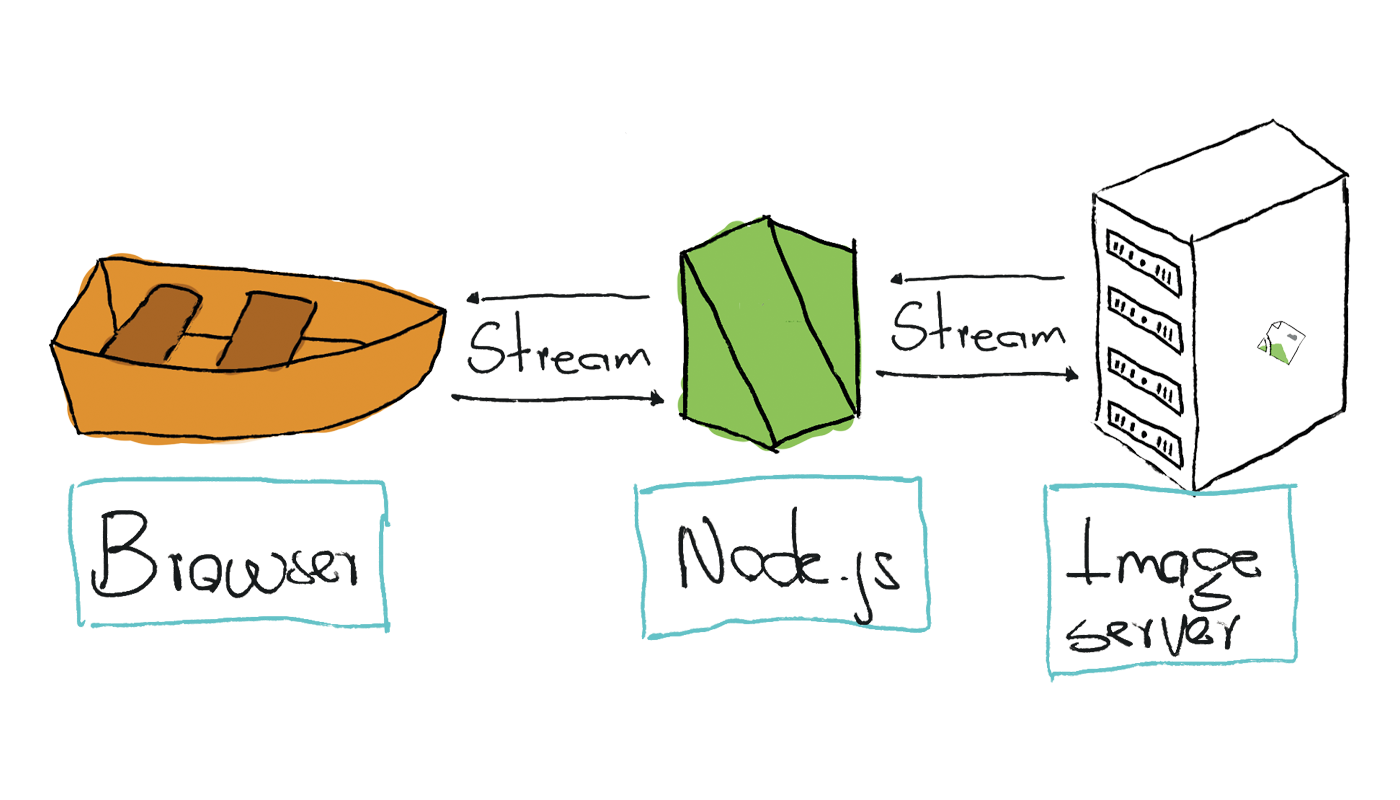

In Node.js, HTTP was developed with streaming and low latency in mind (https://nodejs.org/en/about/), which is why Node.js is considered an excellent option for HTTP servers.

Using streams in HTTP:

HTTP stands for "Hypertext Transfer Protocol", the protocol that powers the web. In a significantly reduced definition, HTTP can be thought of as a TCP-based client-server protocol that lets clients and servers communicate by exchanging streams.

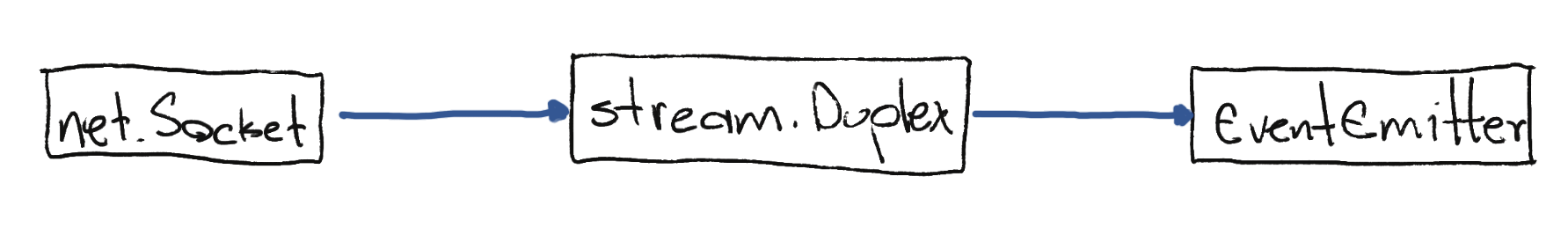

In Node.js, TCP sockets are instances of net.Socket, which inherits from stream.Duplex, which in turn inherits from EventEmitter.
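A quick way to confirm that inheritance chain is to inspect the prototypes directly. This is only a minimal sketch; the variable names `socketIsDuplex` and `duplexIsEmitter` are made up for illustration.

```javascript
const net = require('net')
const { Duplex } = require('stream')
const { EventEmitter } = require('events')

// Walk the prototype chain instead of opening a real connection
const socketIsDuplex = net.Socket.prototype instanceof Duplex
const duplexIsEmitter = Duplex.prototype instanceof EventEmitter

console.log({ socketIsDuplex, duplexIsEmitter })
```

Both checks should print `true`, which is why everything a stream or an event emitter can do is also available on a socket.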

They are more like this:

Streams are such powerful abstractions that they are hard to define, but analogies can help: "Best analogy I can think of is that a stream is a conveyor belt coming towards you or leading away from you (or sometimes both)" (taken from Stack Overflow).
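To make the conveyor belt analogy concrete, here is a small sketch with one belt coming towards you (a Readable), one leading away (a Writable), and one doing both (a Transform). The `runBelt` helper and the uppercase transformation are hypothetical and exist only for this illustration.

```javascript
const { Readable, Writable, Transform, pipeline } = require('stream')

// Hypothetical helper: moves items along three "belts" and collects the result
function runBelt (items) {
  return new Promise((resolve, reject) => {
    const received = []

    // Belt coming towards you: produces data
    const incoming = Readable.from(items)

    // Belt going both ways: receives data and hands it over modified
    const both = new Transform({
      objectMode: true,
      transform (chunk, encoding, callback) {
        callback(null, String(chunk).toUpperCase())
      }
    })

    // Belt leading away from you: consumes data
    const outgoing = new Writable({
      objectMode: true,
      write (chunk, encoding, callback) {
        received.push(chunk)
        callback()
      }
    })

    pipeline(incoming, both, outgoing, (err) => {
      if (err) reject(err)
      else resolve(received)
    })
  })
}

runBelt(['a', 'b', 'c']).then((result) => console.log(result)) // [ 'A', 'B', 'C' ]
```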

With all that said, let's get our hands dirty!

This project aims to take advantage of the whole inheritance tree of the HTTP implementation in Node.js. The first streaming example is quite simple, and it is the starting point for explaining HTTP/1.1 streams.

    const https = require('https')
    const http = require('http')

    const server = http.createServer(requestHandler)
    const source = 'https://libuv.org/images/libuv-bg.png'

    function requestHandler (clientRequest, clientResponse) {
      // Request the image and stream its response straight to the client
      https.request(source, handleImgResponse).end()

      function handleImgResponse (imgResponseStream) {
        imgResponseStream.pipe(clientResponse)
      }
    }

    server.listen(process.env.PORT || 3000)

The code above creates a bare HTTP server that always responds to the client with the response obtained from requesting "https://libuv.org/images/libuv-bg.png". I love that dino.
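If you want to try the same piping idea without reaching the network, the sketch below swaps the remote image for a local upstream server. Everything in it (`HOST`, `createUpstream`, `createProxy`, `fetchThroughProxy`, and the text payload) is an assumption made for this illustration and not part of the original project.

```javascript
const http = require('http')
const { once } = require('events')

const HOST = '127.0.0.1'

// Hypothetical upstream that stands in for the remote image host
function createUpstream () {
  return http.createServer((request, response) => {
    response.write('first chunk, ')
    response.end('second chunk')
  })
}

// Same shape as the server above: pipe the upstream response to the client
function createProxy (upstreamPort) {
  return http.createServer((clientRequest, clientResponse) => {
    http.get({ host: HOST, port: upstreamPort, agent: false }, (upstreamResponse) => {
      upstreamResponse.pipe(clientResponse)
    })
  })
}

// Start both servers on free ports, fetch once through the proxy, shut down
async function fetchThroughProxy () {
  const upstream = createUpstream().listen(0, HOST)
  await once(upstream, 'listening')
  const proxy = createProxy(upstream.address().port).listen(0, HOST)
  await once(proxy, 'listening')

  const request = http.get({ host: HOST, port: proxy.address().port, agent: false })
  const [response] = await once(request, 'response')

  const chunks = []
  for await (const chunk of response) chunks.push(chunk)

  upstream.close()
  proxy.close()
  return Buffer.concat(chunks).toString()
}

fetchThroughProxy().then((body) => console.log(body)) // first chunk, second chunk
```

The proxy never buffers the whole body; each chunk is forwarded as soon as it arrives, which is the point of piping one stream into another.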

Let's examine all the components in this server.

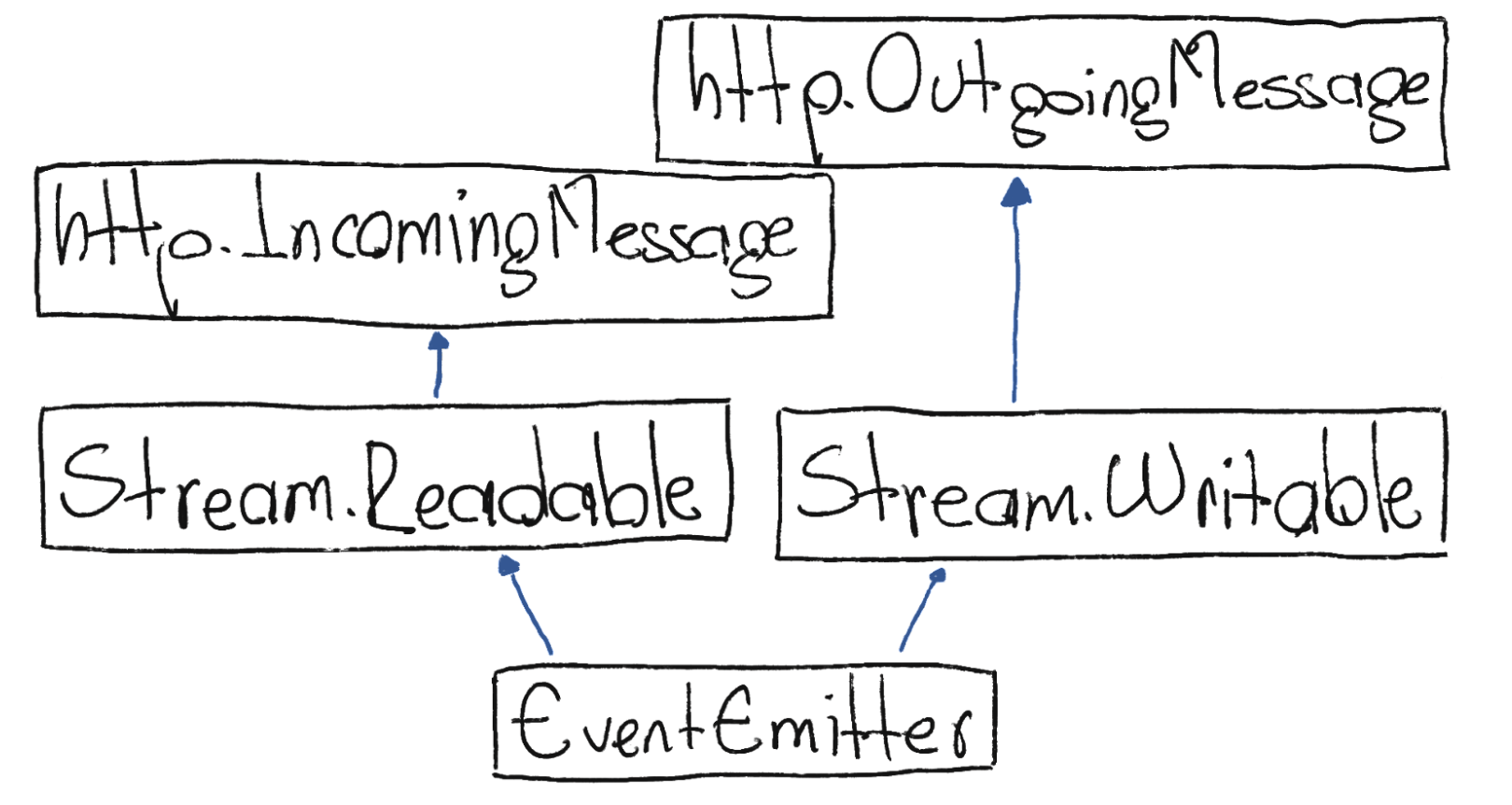

The http.IncomingMessage can be understood as the "request" object and the http.OutgoingMessage as the "response" object.
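The relationship between these HTTP classes and streams can be checked in a few lines. This is a minimal sketch; the `checks` object is just a hypothetical name used to group the results.

```javascript
const http = require('http')
const Stream = require('stream')
const { EventEmitter } = require('events')

const checks = {
  // The "request" object handed to a server handler is a Readable stream
  incomingIsReadable: http.IncomingMessage.prototype instanceof Stream.Readable,
  // The "response" object handed to a server handler is an OutgoingMessage
  responseIsOutgoing: http.ServerResponse.prototype instanceof http.OutgoingMessage,
  // OutgoingMessage extends the base Stream class, which is an EventEmitter
  outgoingIsStream: http.OutgoingMessage.prototype instanceof Stream,
  outgoingIsEmitter: http.OutgoingMessage.prototype instanceof EventEmitter
}

console.log(checks)
```

All four values should be `true`.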

Yes! Streams in Node.js are event emitters, isn't that incredible?! Now let's uncover the "stream" events:

    function handleImgResponse (imgResponseStream) {
      imgResponseStream
        .on('data', (data) => console.log(`Chunk size: ${data.length} bytes`)) // Emitted when data is read from the stream
        .on('end', () => console.log('End event emitted')) // Emitted when no more data will be read from the stream
        .on('close', () => console.log('Close event emitted')) // Emitted when the server closes the connection (in HTTP cases)
        .on('error', (error) => console.error(error)) // Can be emitted at any time; handles any error related to the stream
        .pipe(clientResponse)
    }
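The order of these events can be observed on any Readable, without an HTTP request. The sketch below records them for an in-memory stream; `recordEvents` is a hypothetical helper, and the expected order assumes a recent Node.js version where streams are destroyed automatically after they end.

```javascript
const { Readable } = require('stream')

// Hypothetical helper: resolves with the names of the events in arrival order
function recordEvents (readable) {
  return new Promise((resolve, reject) => {
    const seen = []
    readable
      .on('data', () => seen.push('data'))
      .on('end', () => seen.push('end'))
      .on('close', () => {
        seen.push('close')
        resolve(seen)
      })
      .on('error', reject)
  })
}

recordEvents(Readable.from(['a', 'b']))
  .then((seen) => console.log(seen.join(' > '))) // data > data > end > close
```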

What if we mess around with the event emitter?

    function requestHandler (clientRequest, clientResponse) {
      https.request(source, handleImgResponse).end()

      function handleImgResponse (imgResponseStream) {
        imgResponseStream
          .on('data', function () { clientResponse.emit('error', new Error('Early abort')) })
          .pipe(clientResponse)

        clientResponse.on('error', function () { this.destroy() })
      }
    }

When the first chunk of the image is received, an error event is emitted on the client response. The client response's error handler catches it and destroys the socket connection.

Or maybe emit some events in the imgRequest stream:

    function requestHandler (clientRequest, clientResponse) {
      const imgRequest = https.request(source, handleImgResponse).end()

      function handleImgResponse (imgResponseStream) {
        imgResponseStream.pipe(clientResponse)
      }

      imgRequest.on('response', function (imgResponseStreamAgain) {
        imgResponseStreamAgain.emit('data', 'break this response')
      })
    }

Inspecting the response content shows that the server corrupted the response data; the "break this response" string is visible in the first bytes of the response.

    00000000  62 72 65 61 6b 20 74 68 69 73 20 72 65 73 70 6f  |break this respo|
    00000010  6e 73 65 89 50 4e 47 0d 0a 1a 0a 00 00 00 0d 49  |nse.PNG........I|
    00000020  48 44 52 00 00 03 ce 00 00 03 ce 08 06 00 00 00  |HDR.............|
    00000030  …
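The same corruption can be reproduced locally. In the sketch below, a PassThrough stream plays the role of the image response and an in-memory Writable plays the role of the client response; `injectIntoPipe`, `source`, `sink`, and both payload strings are assumptions made for this illustration.

```javascript
const { PassThrough, Writable } = require('stream')

// Hypothetical helper: resolves with everything the destination received
function injectIntoPipe () {
  return new Promise((resolve) => {
    const received = []
    const source = new PassThrough()
    const sink = new Writable({
      write (chunk, encoding, callback) {
        received.push(chunk.toString())
        callback()
      }
    })

    sink.on('finish', () => resolve(received.join('')))
    source.pipe(sink)

    // pipe() listens for 'data' on the source, so a hand-made event is
    // forwarded to the destination as if it were real data
    source.emit('data', 'break this response ')
    source.end('real payload')
  })
}

injectIntoPipe().then((body) => console.log(body)) // break this response real payload
```

Nothing validates where a 'data' event came from, so any code holding a reference to the stream can inject bytes ahead of the real content.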

By the way, if you need to inspect a stream's data, using for await is recommended over listening to the 'data' event:

    for await (const chunk of readableStream) {
      console.log(chunk.toString())
    }
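Since for await only works inside an async function (or an ES module), a complete version could look like the sketch below. The `inspect` helper is a hypothetical name; it also shows that a stream error surfaces as a normal exception that try/catch can handle.

```javascript
const { Readable } = require('stream')

// Hypothetical helper: collects every chunk, or the error that ended the stream
async function inspect (readableStream) {
  const seen = []
  try {
    for await (const chunk of readableStream) {
      seen.push(chunk.toString())
    }
  } catch (err) {
    // A stream error is thrown out of the loop like any other exception
    seen.push(`error: ${err.message}`)
  }
  return seen
}

inspect(Readable.from(['hello', 'world']))
  .then((seen) => console.log(seen)) // [ 'hello', 'world' ]
```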

The Writable stream events are examinable too:

    function requestHandler (clientRequest, clientResponse) {
      https.request(source, handleImgResponse).end()

      function handleImgResponse (imgResponseStream) {
        imgResponseStream
          .pipe(clientResponse)
      }

      clientResponse
        .on('pipe', () => console.log('readable stream piped')) // Emitted when a readable stream is piped into this writable
        .on('finish', () => console.log('Response write finished')) // Emitted once end() was called and all data was flushed (pipe() calls end() when the source emits 'end')
        .on('close', () => console.log('Connection with the client closed'))
    }

That said, avoid relying on events in streams. There are many "stream-like" objects in the wild; unless you are 100% sure that all dependencies follow the "event rules" mentioned above, there is no guarantee of solid, predictable, or even compatible behavior.

The stream.finished method is the recommended way to handle stream errors without using their events.

    const { finished } = require('stream')

    // readableStream is whichever stream you want to watch
    finished(readableStream, (err) => {
      if (err) {
        console.error('Stream failed.', err)
      }
    })
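To see both outcomes of stream.finished, the sketch below watches one stream that completes and one that fails. The `watch` helper and the deliberately broken stream are hypothetical and only serve the illustration.

```javascript
const { Readable, finished } = require('stream')

// Hypothetical helper: reports how a readable stream ended
function watch (readable) {
  return new Promise((resolve) => {
    finished(readable, (err) => {
      resolve(err ? `failed: ${err.message}` : 'completed')
    })
    // Consume the stream so it can reach its end
    readable.resume()
  })
}

const healthy = Readable.from(['a', 'b'])

// A stream that fails as soon as something tries to read from it
const broken = new Readable({
  read () {
    this.destroy(new Error('boom'))
  }
})

watch(healthy).then((outcome) => console.log(outcome)) // completed
watch(broken).then((outcome) => console.log(outcome)) // failed: boom
```

The callback runs exactly once per stream, whether it ended normally or with an error, so there is no need to wire 'end', 'close', and 'error' handlers by hand.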

Streams can be a powerful tool in Node.js, but they are easy to misunderstand, and you could shoot yourself in the foot. Use them carefully and make sure they are predictable and consistent across your whole application.

This blog post could be considered an extension of the Streams series.
